👑 Abstract

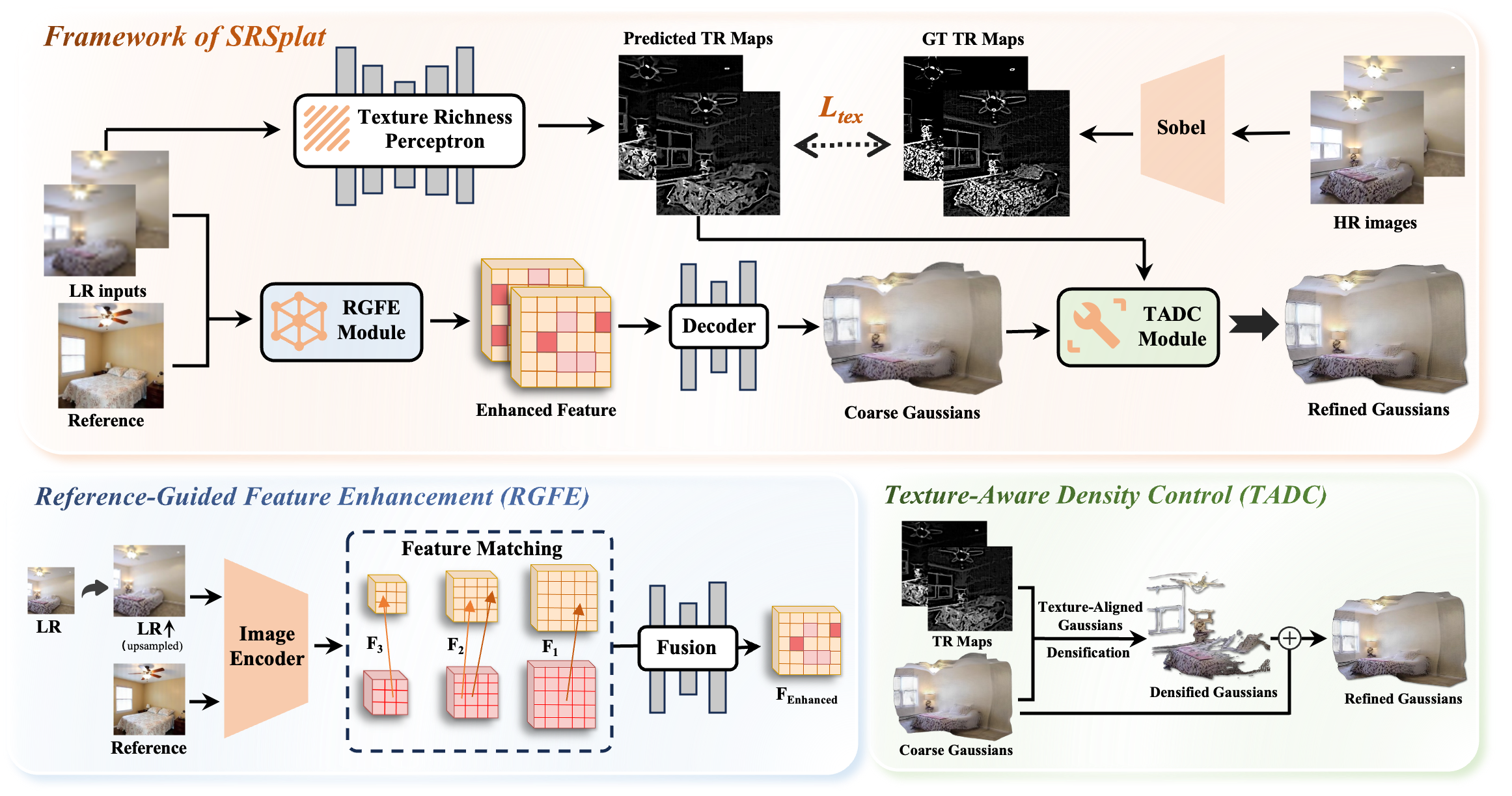

Feed-forward 3D reconstruction from sparse, low-resolution (LR) images is a crucial capability for real-world applications, such as autonomous driving and embodied AI. However, existing methods often fail to recover fine texture details. This limitation stems from the inherent lack of high-frequency information in LR inputs. To address this, we propose SRSplat, a feed-forward framework that reconstructs high-resolution 3D scenes from only a few LR views. Our main insight is to compensate for the deficiency of texture information by jointly leveraging external high-quality reference images and internal texture cues. We first construct a scene-specific reference gallery, generated for each scene using Multimodal Large Language Models (MLLMs) and diffusion models. To integrate this external information, we introduce the Reference-Guided Feature Enhancement (RGFE) module, which aligns and fuses features from the LR input images and their reference twin images. Subsequently, we train a decoder to predict the Gaussian primitives using the multi-view fused features obtained from RGFE. To further refine the predicted Gaussian primitives, we introduce Texture-Aware Density Control (TADC), which adaptively adjusts Gaussian density based on the internal texture richness of the LR inputs. Extensive experiments demonstrate that SRSplat outperforms existing methods on various datasets, including RealEstate10K, ACID, and DTU, and exhibits strong cross-dataset and cross-resolution generalization capabilities.

📺 Method Explanation

🏃 We propose SRSplat, a Feed-Forward Super-Resolution framework for Sparse Multi-View Images (Anonymous YouTube Link: Video)

🔧 Framework Overview

In this work, we propose SRSplat, a novel feed-forward framework that reconstructs high-quality 3D scenes from only sparse, LR input views. SRSplat outperforms existing methods, handles low-resolution, sparse-view inputs, and supports real-time reconstruction, which makes it more practical for real-world applications.
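To give a feel for the idea behind Texture-Aware Density Control (adjusting Gaussian density according to the texture richness of the LR inputs), here is a minimal NumPy sketch. It is not the SRSplat implementation: the gradient-magnitude texture measure, the patch size, and the function names `texture_richness` and `gaussians_per_patch` are all assumptions chosen for illustration only.

```python
import numpy as np


def texture_richness(image: np.ndarray, patch: int = 8) -> np.ndarray:
    """Hypothetical texture score: mean gradient magnitude per patch, scaled to [0, 1]."""
    # Collapse colour channels so the gradient is computed on intensity only.
    gray = image.mean(axis=-1) if image.ndim == 3 else image
    gy, gx = np.gradient(gray.astype(np.float64))
    magnitude = np.hypot(gx, gy)

    # Crop to a multiple of the patch size, then average inside each patch.
    h, w = magnitude.shape
    h, w = h - h % patch, w - w % patch
    blocks = magnitude[:h, :w].reshape(h // patch, patch, w // patch, patch)
    score = blocks.mean(axis=(1, 3))

    peak = score.max()
    return score / peak if peak > 0 else score


def gaussians_per_patch(score: np.ndarray, min_count: int = 1, max_count: int = 4) -> np.ndarray:
    """Assumed mapping: flat patches get min_count Gaussians, the richest patch gets max_count."""
    return (min_count + np.rint(score * (max_count - min_count))).astype(int)


# Toy LR view: flat left half, noisy (texture-rich) right half.
rng = np.random.default_rng(0)
lr_view = np.zeros((16, 32))
lr_view[:, 16:] = rng.random((16, 16))

score = texture_richness(lr_view, patch=8)
counts = gaussians_per_patch(score)
print(counts)
```

In this toy setup the flat region keeps the minimum number of Gaussians while the textured region is allotted more, which is the qualitative behaviour the abstract describes; the actual criterion used by TADC may differ.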